Using Python and BeautifulSoup to extract all the useful information from GitHub repositories.

Here, BeautifulSoup and Python are used to extract star counts, watcher counts, official URLs, descriptions, and other useful information from the top GitHub repositories listed here. The list contains the top Deep Learning repositories.

Some information, such as the number of watchers, the official URL, and the download link, is not present in the list itself. To get it, we first fetch all the repository links from the list, then make a GET request to each repository link and use BeautifulSoup to extract the useful information from the response.
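The GET step described above can be sketched as a small helper, so that the same request setup serves both the list page and each repository page. The names `USER_AGENT`, `build_request`, and `fetch_html` are illustrative assumptions, and the 30-second timeout is an arbitrary choice.

```python
import urllib.request

# Assumed browser-like User-Agent; any reasonable value should work.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36"
)


def build_request(url):
    """Return a Request for `url` that carries the User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url, timeout=30):
    """GET `url` and return the raw response body as bytes."""
    # The context manager closes the connection even if read() fails.
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        return resp.read()
```

The bytes returned by `fetch_html(url)` can be passed straight to `BeautifulSoup(..., "lxml")`.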

The code is similar to the projects here. Comments are added to explain what each line does.

Python Script:

    import urllib.request

    from bs4 import BeautifulSoup

    # Browser-like User-Agent sent with every request
    USER_AGENT = "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36"

    try:
        url = 'https://github.com/mbadry1/Top-Deep-Learning/blob/master/readme.md'  # list url
        headers = {'User-Agent': USER_AGENT}
        req = urllib.request.Request(url, headers=headers)
        resp = urllib.request.urlopen(req)
        print("connection ok")
        respData = resp.read()
        resp.close()
        print('Done')

        soup = BeautifulSoup(respData, "lxml")
        links = soup.find_all('a')  # holds all the a tags present in the page above

        # The repo links start at index 42; range(42, 43) processes only the
        # first one, so raise the upper bound to process more repos.
        for i in range(42, 43):
            url_now = links[i].get('href')  # holds the current repo link
            req_now = urllib.request.Request(url_now, headers=headers)
            resp_now = urllib.request.urlopen(req_now)
            respData_now = resp_now.read()
            resp_now.close()

            soup_now = BeautifulSoup(respData_now, "lxml")
            tmp = url_now[19:]  # path of the repo after https://github.com/

            # The attribute values are quoted because they contain slashes.
            watch = soup_now.select_one('a[href*="' + tmp + '/watchers"]')  # a tag whose href contains <repo path>/watchers
            star = soup_now.select_one('a[href*="' + tmp + '/stargazers"]')  # a tag whose href contains <repo path>/stargazers

            desc = soup_now.find("span", itemprop="about").get_text()
            desc_ok = desc.encode('ascii', 'ignore').decode('ascii', 'ignore')  # drop non-ASCII characters

            official = soup_now.find("span", itemprop="url")
            if official:  # checks whether the official url is present
                official_ok = str(official.a.get('href'))
            else:
                official_ok = 'None'

            download = soup_now.find("a", class_="get-repo-btn")
            download2 = download.find_next_siblings('a')  # zip download link

            star_n = star.get_text()  # fetch no. of stars from the star object
            watch_n = watch.get_text()  # fetch no. of watchers from the watch object

            # output all the fetched data
            print(str(i - 41) + ' : ' + str(links[i].get_text()))  # counter
            print('Description: ' + str(desc_ok.strip()))
            print('Star : ' + str(star_n.strip()))
            print('Watch : ' + str(watch_n.strip()))
            print('Url : ' + official_ok)
            print('Download :' + str(download2[0].get('href')))

    except Exception as e:
        print(str(e))
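The loop above relies on two layout details: the repository links start at index 42, and every link begins with the 19-character prefix `https://github.com/`. A less position-dependent variant, sketched here under the assumption that repository links always have the form `https://github.com/<owner>/<repo>`, filters by URL shape instead. `repo_slug` and `count_selector` are hypothetical helper names, and the `hrefs` list is made-up sample data.

```python
from urllib.parse import urlparse


def repo_slug(href):
    """Return 'owner/repo' for a GitHub repository URL, else None.

    Limitation: any two-segment github.com path passes this check,
    so non-repository pages with that shape are not filtered out.
    """
    if not href:
        return None
    parsed = urlparse(href)
    if parsed.scheme not in ("http", "https") or parsed.netloc != "github.com":
        return None
    parts = [p for p in parsed.path.split("/") if p]
    # Exactly two path segments: owner and repository name.
    if len(parts) != 2:
        return None
    return "/".join(parts)


def count_selector(slug, kind):
    """Build a CSS selector for the 'watchers' or 'stargazers' link.

    The attribute value is quoted because it contains slashes.
    """
    return 'a[href*="{}/{}"]'.format(slug, kind)


# Sample hrefs, standing in for [a.get('href') for a in links].
hrefs = [
    "#readme",
    "https://github.com/mbadry1/Top-Deep-Learning/blob/master/readme.md",
    "https://github.com/tensorflow/tensorflow",
    None,
]
slugs = [s for s in map(repo_slug, hrefs) if s]
print(slugs)
print(count_selector(slugs[0], "stargazers"))
```

With this filter, the loop could iterate over `slugs` directly, and the same slug would serve as the `tmp` value in both selectors.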

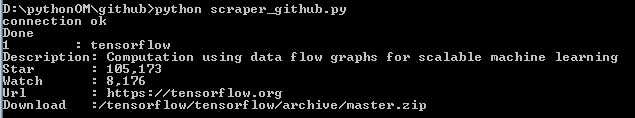

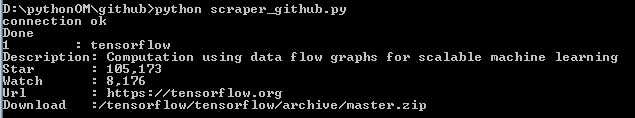

Result:

We can use the fetched information in other ways. Here is an implementation of the above code, with some modifications, that collects all the newly fetched information into a list in order to build an HTML page. Result: here.
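As a rough illustration of that last step, the sketch below turns a list of fetched records into an HTML table. It is not the linked implementation; the dictionary keys, the `render_table` name, and the sample record are assumptions made for this example.

```python
from html import escape

# Assumed record keys, one per value printed by the script.
FIELDS = ("name", "description", "stars", "watchers", "url", "download")


def render_table(repos):
    """Return an HTML table with one row per repository record."""
    header = "".join("<th>{}</th>".format(escape(f)) for f in FIELDS)
    rows = []
    for repo in repos:
        # Escape every value so scraped text cannot break the markup.
        cells = "".join(
            "<td>{}</td>".format(escape(str(repo.get(f, "None"))))
            for f in FIELDS
        )
        rows.append("<tr>{}</tr>".format(cells))
    return "<table>\n<tr>{}</tr>\n{}\n</table>".format(header, "\n".join(rows))


# Made-up sample record, standing in for values collected in the loop.
sample = [
    {
        "name": "example/repo",
        "description": "A <demo> entry",
        "stars": "1,234",
        "watchers": "56",
        "url": "None",
        "download": "/example/repo/archive/master.zip",
    }
]
print(render_table(sample))
```

In the script, each iteration would append one such dictionary to a list instead of printing, and the list would be rendered once after the loop finishes.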